Model Context Protocol (MCP)

Motivations

The purpose of this document is to guide Data Scientists on using Anthropic’s Model Context Protocol (MCP) for LLM-based applications. While the protocol is relatively old for the AI space, having been released in November 2024, the infrastructure changes required to adopt it are reasonably large and far from complete.

When first researching MCP, I found that most existing documentation was targeted at software developers with the relevant domain knowledge in protocols and abstraction layers. This document is aimed at Data Scientists (and developers) who, like myself, do not have this knowledge but want to gain a working understanding of MCP. As models have become more complex, so have the business requests placed upon the applications they power.

Retrieval-Augmented Generation (RAG) pipelines are becoming a tool of the past. Applications integrated with live context from a wider range of sources are becoming the new normal, and the demand to build these tools increasingly falls not just on sophisticated software teams, but also on willing developers and model builders.

Overview

What is a “Protocol”?

To understand MCP, it is useful to first understand what a protocol is. A protocol is a set of rules or standards that dictate how data should be sent and received. Common protocols you are likely to have interacted with are HTTP/HTTPS and SSH. These are simply a set of rules for what information needs to be included in a request and what you can expect to receive back.

HTTP, for example, expects a request to include a method (GET, POST, etc.), a path, a version, headers and optionally a body. The client can then expect a response from the server that includes a status code (for example, 4xx for client errors), headers and optionally a body.
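To make that structure concrete, here is a minimal sketch that assembles the text of an HTTP/1.1 request by hand and parses a canned response. The host `example.com`, the path and the response text are invented for illustration, and no network call is made.

```python
# Illustrative only: the host, path and canned response are invented for this sketch.

def build_request(method: str, path: str, host: str, body: str = "") -> str:
    """Assemble an HTTP/1.1 request: request line, headers, blank line, optional body."""
    headers = {"Host": host}
    if body:
        headers["Content-Length"] = str(len(body.encode("utf-8")))
    lines = [f"{method} {path} HTTP/1.1"]
    lines += [f"{name}: {value}" for name, value in headers.items()]
    return "\r\n".join(lines) + "\r\n\r\n" + body


def parse_response(raw: str) -> dict:
    """Split a raw HTTP response into version, status code, reason, headers and body."""
    head, _, body = raw.partition("\r\n\r\n")
    status_line, *header_lines = head.split("\r\n")
    version, code, reason = status_line.split(" ", 2)
    headers = dict(line.split(": ", 1) for line in header_lines)
    return {"version": version, "status": int(code), "reason": reason,
            "headers": headers, "body": body}


request = build_request("GET", "/reports/latest", "example.com")
canned = "HTTP/1.1 404 Not Found\r\nContent-Type: text/plain\r\n\r\nno such report"
response = parse_response(canned)

print(request)
print(response["status"], response["headers"], response["body"])
```

Because both sides agree on this layout, any client can talk to any server without bespoke parsing code.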

Protocols standardise how data are sent and received, which makes it straightforward to build services that rely on exchanging data. If protocols didn’t exist, each server implementation might require different request formats, forcing clients to create custom code for each server they need to interact with.

MCP

Model Context Protocol is a protocol designed to handle the context injected into prompts. In our RAG tooling, this is generally pretty simple and open-source frameworks like LangChain make this easy. We start with a user query, feed this into a retrieval pipeline that finds relevant pieces of information from a number of documents and then inject this context into a prompt, along with the user query (plus some optional templating) and have the LLM return the answer to the user’s query from the provided context. This is effective for consistent documents stored in a structured graphdb or vectorstore, but fails quickly if we need to pull from other services.

If the user’s query would benefit from context stored in an external API and/or an SQL database, the RAG approach fails. We can access the additional context using function calls that connect to the required data, retrieve it and inject it back into the prompt. This is the foundation of agentic pipelines. However, this solution does not scale, because each additional context source needs custom code to standardise the response from that resource. As with HTTP, this is where MCP comes in: it provides a standard for how we handle requests to and responses from these resources.
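The scaling problem can be sketched in a few lines. Everything here is hypothetical: the two stand-in sources, their payload shapes and the function names are invented to show that each new source needs its own glue code before its data can be placed in a prompt.

```python
# Hypothetical sources: the functions and payload shapes are invented for illustration.

def fetch_weather_api(city: str) -> dict:
    """Stand-in for an external REST API that returns nested JSON."""
    return {"location": {"name": city}, "current": {"temp_c": 18.5}}


def query_sales_db(region: str) -> list[tuple]:
    """Stand-in for an SQL query that returns rows as tuples."""
    return [(region, "2025-03", 120_000.0)]


# Without a shared protocol, every source needs its own code to become prompt context.
def weather_to_context(payload: dict) -> str:
    return f"Temperature in {payload['location']['name']}: {payload['current']['temp_c']} C"


def sales_to_context(rows: list[tuple]) -> str:
    return "; ".join(f"{region} sales for {month}: {total:.0f}" for region, month, total in rows)


context = "\n".join([
    weather_to_context(fetch_weather_api("Leeds")),
    sales_to_context(query_sales_db("North")),
])
prompt = f"Answer using only this context:\n{context}\n\nQuestion: How did the North region do?"
print(prompt)
```

Adding a third source means writing a third fetcher and a third formatter, which is the duplication a shared protocol aims to remove.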

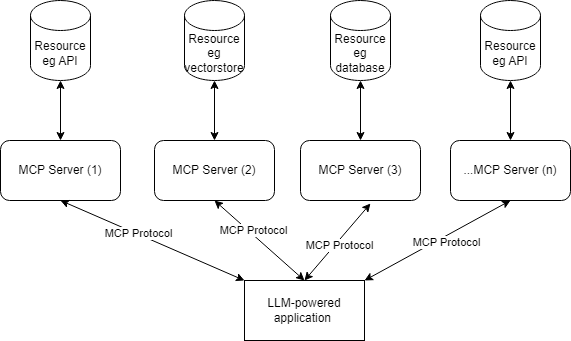

The diagram above demonstrates how the protocol works for an LLM application. The app requests context from a server using MCP. The server then goes to the relevant resource, gets the information and responds to the LLM application in a standardised way. The response can then be fed into a prompt to provide additional context.
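As a rough sketch of what such an exchange can look like on the wire: MCP messages are JSON-RPC 2.0 objects, and a tool is invoked with the `tools/call` method. The tool name `lookup_customer`, its arguments and the response text are hypothetical, and the exact fields should be checked against the current specification.

```python
import json

# Hypothetical tool name and arguments; the envelope follows JSON-RPC 2.0.
request = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "tools/call",
    "params": {"name": "lookup_customer", "arguments": {"customer_id": "C-1001"}},
}

# A response the server might send back: the result carries content items.
response = {
    "jsonrpc": "2.0",
    "id": 1,
    "result": {"content": [{"type": "text", "text": "Customer C-1001: active since 2021"}]},
}


def context_from_response(message: dict) -> str:
    """Join the text items of a tool result into a single context string."""
    items = message["result"]["content"]
    return "\n".join(item["text"] for item in items if item["type"] == "text")


wire = json.dumps(request)  # the serialised message the app would send
matched = json.loads(wire)["id"] == response["id"]  # replies are paired with requests by id
print(matched, context_from_response(response))
```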

Pros and Cons

| Pros | Cons |
|---|---|
| Pull and inject context in a standardised manner, making integrations with existing and new resources easier. | Setup is more complex than a standard agent or RAG pipeline. |
| Open-source, low-code packages support implementing the Model Context Protocol. | The technology is not yet fully integrated across the process lifecycle. |
| Anthropic are bullish on the tech, and OpenAI have created an integration. | There is debate about whether this technology has a long-term future. Setup is a reasonable time commitment, which is a risk if the protocol isn’t adopted within the organisation or support is pulled from future models. |

Servers and Tools

“Servers” are lightweight programs that provide a capability (e.g. retrieving data) whose output is fed back to the model as context.

“Tools” are the functions that can be executed to create the context provided to the model.

A simple example would be a server that adds two numbers together and provides the result back to the model as context. This is deliberately trivial, and if it were all we needed, MCP would not be worth the effort, but it is an easy way to demonstrate how to create the architecture.

First, let’s create a function that can add two numbers and register it as a tool so that it can be executed:

from mcp.server.fastmcp import FastMCP

# create a server
mcp = FastMCP(name="demo")

# create a tool
@mcp.tool(description="Add two numbers")
def add_numbers(a: float, b: float) -> float:
    """Add two numbers."""
    return a + b

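Then let’s have a look at what’s actually going on under the hood. A protocol is just structured data: we tell our server what to expect and what to do when it receives it. It can be helpful to visualise the structure of a tool. The helper below is an illustration that builds a similar description by inspecting the function; it is not the exact schema that the mcp package generates: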

import json
import inspect

def inspect_mcp_tool(tool_function):
    sig = inspect.signature(tool_function)
    doc = inspect.getdoc(tool_function)

    parameters = {}
    for name, param in sig.parameters.items():
        if name == "self" or name == "cls":
            continue
        param_info = {
            "type": str(param.annotation.__name__ if param.annotation != inspect.Parameter.empty else "any")
        }
        parameters[name] = param_info

    tool_info = {
        "name": tool_function.__name__,
        "description": doc.split("\n\n")[0] if doc else "",
        "parameters": {
            "type": "object",
            "properties": parameters,
            "required": list(parameters.keys())
        },
        "returns": str(sig.return_annotation.__name__ if sig.return_annotation != inspect.Parameter.empty else "any"),
        "is_async": inspect.iscoroutinefunction(tool_function)
    }
    return json.dumps(tool_info, indent=2)

print(inspect_mcp_tool(add_numbers))

This prints:

{
  "name": "add_numbers",
  "description": "Add two numbers.",
  "parameters": {
    "type": "object",
    "properties": {
      "a": {
        "type": "float"
      },
      "b": {
        "type": "float"
      }
    },
    "required": [
      "a",
      "b"
    ]
  },
  "returns": "float",
  "is_async": false
}

The tool structure includes a name, description, defined properties, and expected return type. This structured format can be passed to applications where LLMs can analyze it to select the most appropriate tool for a given task with the provided inputs. While our addition example is deliberately simple, this same approach enables more complex business logic to be presented clearly and consistently to models. For instance, the structured format helps guide models to select the correct data resource when multiple options exist, ensuring they retrieve information from the most relevant source based on well-defined parameters and descriptions.
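A minimal sketch of how an application might use such a description: once a model has proposed a tool call, the app can check it against the spec before executing anything. The `TOOLS` registry, the `dispatch` function and the hard-coded `proposed_call` are assumptions made for illustration; a real application would parse the call from the LLM’s output.

```python
# Hypothetical registry keyed by tool name; the spec mirrors the structure printed above.

def add_numbers(a: float, b: float) -> float:
    """Add two numbers."""
    return a + b


TOOLS = {
    "add_numbers": {
        "function": add_numbers,
        "spec": {
            "description": "Add two numbers.",
            "parameters": {
                "properties": {"a": {"type": "float"}, "b": {"type": "float"}},
                "required": ["a", "b"],
            },
        },
    },
}


def dispatch(call: dict):
    """Validate a proposed tool call against its spec, then execute it."""
    tool = TOOLS.get(call["name"])
    if tool is None:
        raise ValueError(f"Unknown tool: {call['name']}")
    params = tool["spec"]["parameters"]
    missing = [p for p in params["required"] if p not in call["arguments"]]
    unexpected = [p for p in call["arguments"] if p not in params["properties"]]
    if missing or unexpected:
        raise ValueError(f"Bad arguments: missing={missing}, unexpected={unexpected}")
    return tool["function"](**call["arguments"])


# Stand-in for a call chosen by the model from the tool descriptions.
proposed_call = {"name": "add_numbers", "arguments": {"a": 2, "b": 3.5}}
result = dispatch(proposed_call)
print(result)
```

Rejecting malformed calls up front keeps errors out of the tool itself and gives the application a clear message to feed back to the model.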

With many functions to call, knowing exactly what goes into and comes out of each one makes it easier to build applications that use them together.

Now let’s run our server:

from mcp.server.fastmcp import FastMCP

# create a server
mcp = FastMCP(name="demo")

# create a tool
@mcp.tool(description="Add two numbers")
def add_numbers(a: float, b: float) -> float:
    """Add two numbers."""
    return a + b

if __name__ == "__main__":
    mcp.run(transport="stdio")

We can make the tool accessible by saving the above as server.py and running the following client. The client is adapted from Anthropic’s own documentation: it starts the server and lists its tools, but leaves out a live run against an LLM. For a complete implementation, see “For Client Developers” in the Model Context Protocol documentation.

from contextlib import AsyncExitStack
from typing import Optional
import asyncio

from mcp import ClientSession, StdioServerParameters
from mcp.client.stdio import stdio_client

class MCPClient:
    def __init__(self):
        self.session: Optional[ClientSession] = None
        self.exit_stack = AsyncExitStack()
        self.stdio = None
        self.write = None

    async def connect_to_server(self, server_script_path: str):
        """Connect to an MCP server

        Args:
            server_script_path: Path to the server script (.py or .js)
        """
        command = "python"
        server_params = StdioServerParameters(
            command=command,
            args=[server_script_path],
            env=None
        )

        stdio_transport = await self.exit_stack.enter_async_context(stdio_client(server_params))
        self.stdio, self.write = stdio_transport
        self.session = await self.exit_stack.enter_async_context(ClientSession(self.stdio, self.write))

        await self.session.initialize()

        # List available tools
        response = await self.session.list_tools()
        tools = response.tools
        print("\nConnected to server with tools:", [tool.name for tool in tools])

    async def close(self):
        """Close the client connection"""
        await self.exit_stack.aclose()

async def main():
    client = MCPClient()
    try:
        await client.connect_to_server("server.py")
    finally:
        await client.close()

if __name__ == "__main__":
    asyncio.run(main())

Running the client should list our tool:

# python client.py
[04/02/25 16:57:21] INFO Processing request of type ListToolsRequest server.py:534

Connected to server with tools: ['add_numbers']

What has happened here is that we have created a function (or ‘tool’) and made it MCP compliant by registering it with an MCP server using the mcp package. We then ran the server and connected a client to it. The client can see the tool and knows exactly what it does thanks to our naming and description, what it needs thanks to mcp generating the parameters, and what to expect back. The client can then call the tool to enrich its context in a reliable manner.
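As a sketch of that final step, the function below connects to the server and calls the tool by name. It assumes the mcp package is installed and that server.py from above is in the working directory. `call_add_numbers` and `result_text` are names introduced here for illustration, and the assumption that the result exposes content items with a `.text` attribute should be checked against the installed version of the package.

```python
import sys


def result_text(result) -> str:
    """Join the text of every content item in a tool result that carries text."""
    return "\n".join(item.text for item in result.content if hasattr(item, "text"))


async def call_add_numbers(a: float, b: float) -> str:
    """Start server.py over stdio, call the add_numbers tool and return its text output."""
    # Imported here so result_text can be used without the mcp package installed.
    from mcp import ClientSession, StdioServerParameters
    from mcp.client.stdio import stdio_client

    # Assumption: server.py sits in the current working directory.
    params = StdioServerParameters(command=sys.executable, args=["server.py"])
    async with stdio_client(params) as (read, write):
        async with ClientSession(read, write) as session:
            await session.initialize()
            result = await session.call_tool("add_numbers", {"a": a, "b": b})
            return result_text(result)
```

To try it, run `asyncio.run(call_add_numbers(2, 3))` after importing `asyncio`; the returned string can then be placed into a prompt as context.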

Conclusion

As AI applications continue to evolve beyond simple RAG pipelines toward more sophisticated context integration, MCP represents a promising step forward in standardising how models interact with diverse data sources. While still in its early adoption phase, the protocol offers data scientists and developers a structured approach to building more flexible and powerful LLM applications. Despite the initial setup complexity and uncertainty about widespread adoption, the potential benefits of standardised context handling are significant. For teams working on complex applications requiring multiple data sources, MCP provides an elegant solution that may well become an industry standard as the ecosystem matures.

Appendix

Azure OpenAI MCP Integration blog